I am floored that almost all of the new 2013 tech products are reaching the market in the fourth quarter. For the most part, the other three quarters of the year were not so much wasted as not used to smooth supply and demand. What is to be done?

2013 products arrive in Q4

Here are some of the data points I used to conclude that 2013 is a backend-loaded product year:

- Data Center: Xeon E3-1200 v3 single-socket chips based on the Haswell architecture started shipping this month. Servers follow next quarter. Xeon E5 dual-socket chips based on Ivy Bridge have been announced and are expected in shipping servers in Q4. New Avoton and Rangeley Atom chips for micro-servers and storage/comms have been announced and are expected in products in Q4.

- PCs: my channel checks show 2013 Gen 4 Core (Haswell) chips in about 10% of SKUs at retail, mostly quad-core. Dual-core chips are now arriving and we’ll see lower-end Haswell notebooks and desktops arriving imminently. Apple, for instance, launched its Haswell-based 2013 iMac all-in-ones September 24th. But note the 2013 Mac Pro announced in June has not shipped and the new MacBooks are missing in action.

- Tablets: Intel’s Bay Trail Atom chips announced in June are now shipping. They’ll be married to Android or Windows 8.1, which ships in late October. Apple’s 2013 iPad products have not been announced. Android tabs this year have mostly seen software updates, not significant hardware changes.

- Phones: Apple’s new phones started selling this week. The 5C is last year’s product with a cost-reduced plastic case. The iPhone 5S is the hot product. Unless you stood all day in line last weekend, you’ll be getting your ordered phone … in Q4. Intel’s Merrifield Atom chips for smartphones, announced in June, have yet to be launched. I’m thinking Merrifield gets the spotlight at the early January ’14 CES show.

How did we get so backend loaded?

I don’t think an economics degree is needed to explain what has happened. The phenomenal unit growth over the past decade in personal computers, including mobility, has squarely placed the industry under the forces of global macro-economics. The recession in Europe, the pull-back in emerging countries led by China, and slow growth in the USA all contribute to a sub-par global economy. Unit volume growth rates have fallen.

The IT industry has reacted with slowed new product introductions in order to sell more of the existing products, which reduces the cost-per-unit of R&D and overhead of existing products. And increases profits.
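
The arithmetic behind that is simple enough to sketch in a few lines of Python. The dollar figures and unit volumes below are made up for illustration, not industry data, and `cost_per_unit` is just a helper name I picked:

```python
def cost_per_unit(fixed_costs: float, variable_cost: float, units: int) -> float:
    """Fully loaded cost of one unit when fixed costs are spread over `units`."""
    if units <= 0:
        raise ValueError("units must be positive")
    return variable_cost + fixed_costs / units


# Hypothetical figures, for illustration only.
FIXED = 500_000_000   # R&D plus overhead for one product generation, in dollars
VARIABLE = 300.0      # cost to build one more unit, in dollars

short_run = cost_per_unit(FIXED, VARIABLE, 5_000_000)   # product replaced on schedule
long_run = cost_per_unit(FIXED, VARIABLE, 7_500_000)    # same product sold for longer

print(f"short run: ${short_run:,.2f} per unit")   # short run: $400.00 per unit
print(f"long run:  ${long_run:,.2f} per unit")    # long run:  $366.67 per unit
```

Under these assumed numbers, selling the existing product for longer drops the loaded cost by about $33 a unit, and that difference goes to the bottom line if prices hold.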

Unfortunately, products are typically built to a forecast. The forecast for 2012-2013 was higher than reality. More product was built than could be sold. There are warehouses full of last year’s technology.

The best laugh I’ve gotten in the past year from industry executives came from suggesting that “I know a guy who knows a guy in New Jersey who could maybe arrange a warehouse fire.” After about a second of mental arithmetic, I usually get a broad smile back and a response like “Hypothetically, that would certainly be very helpful.” (Industry execs must think I routinely wear a wire.)

So, with warehouses full of product which will depreciate dramatically upon new technology announcements, the industry has said “Give us more time to unload the warehouses.”

Meanwhile, getting the new base technology out the door on schedule is harder, not easier. Semiconductor fabrication, new OS releases, new sensors and drivers, etc. all contribute to friction in the product development schedule. But flaws are unacceptable because of the replacement costs. For example, if a computing flaw is found in Apple’s new iOS 7, which shipped five days ago, Apple will have to fix the install on over 100 million devices and climbing — and deal with class action lawsuits and reputation damage; costs over $1 billion are the starting point.

In short, the industry has slowed its cadence over the past several years to the point where all the sizzle in the market with this year’s products happens at the year-end holidays. (Glad I’m not a Wall Street analyst.)

What happens next?

The warehouses will still be stuffed entering 2014. But there will be less 2012 tech on those shelves, now replaced by 2013 tech.

Marching soldiers are taught that when they get out of step, they skip once and get back in cadence.

The ideal consumer cadence for the IT industry has products shipping in Q2 and fully ramped by mid-Q3; that’s in time for the back-to-school major selling season, second only to the holidays. The data center cadence is more centered on a two-year cycle, while enterprise PC buying prefers predictability.

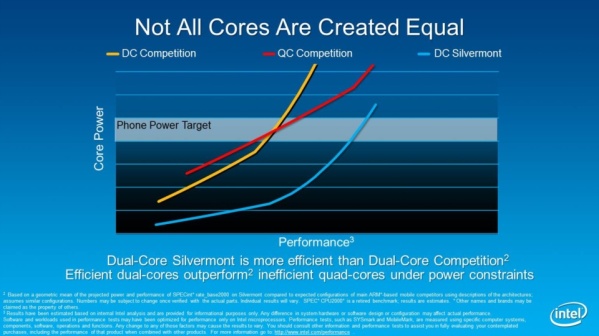

Consumer tech in 2014 broadly moves to a smaller process node and doubles up to quad-cores. Competitively, Intel is muscling its way into tablets and smartphones. The A7 processor in the new Apple iPhone 5S is Apple’s first shot in response. Intel will come back with 14nm Atoms in 2014, and Apple will have an A8.

Notebooks will see a full generation of innovation as Intel delivers 14nm chips that are on an efficiency path towards threshold voltages (as low as possible) that deliver outstanding battery life. A variation on the same tech gets to Atom by the 2014 holidays.

The biggest visible product changes will be in form-factors, as two-in-one notebooks in many designs compete with tablets in many sizes. The risk-averse product manufacturers (who own that product in the warehouses) have to innovate or die, macro-economic conditions be damned. Dell comes to mind.

On the software side, Apple’s iOS 7 looks and acts a lot more like Android than ever before. Who would have guessed that? Microsoft tries again with Windows 8.1.

Consumer buyers will be information-hosed with more changes than they have seen in years, making decision-making harder.

Intel has been very cagey about what 2014 brings to desktops; another year of Haswell refreshes before a new architecture in 2015 is entirely possible. Otherwise, traditional beige boxes are being replaced with all-in-ones and innovative small form-factor machines.

The data center is in step and a skip is unnecessary. The 2014 market battle will answer the question: what place do micro-servers have in the data center? However, there is too much server-supplier capacity chasing an increasingly commoditized data center. Reports have IBM selling off its server business, and Dell is going private to focus on the long term.

The bright spot is that tech products of all classes seem to wear out after about 4-5 years, demanding replacement. Anyone still have an iPhone 3G?

The industry is likely to continue to dawdle through its cycles until global macro-economic conditions improve and demand catches up with more of the supply. But making products available even two months earlier in the calendar would improve new-product flow-through by catching the back-to-school season.

Catch me on Twitter @peterskastner