Eighteen months ago, Intel announced it would address the world’s rapidly growing computing continuum by investing in variations on the Intel Architecture (IA). The announcement was met with a ho-hum. Now, many product families are beginning to emerge from the development labs and head towards production. All with IA DNA, these chip families are designed to be highly competitive in literally dozens of new businesses for Intel, to be produced in high volumes, and to deliver genuine value to customers and end users.

Intel is the only company with an architecture, cash flow, fabs, and R&D capable of scaling its computing engines up and down to meet the decade’s big market opportunities. What is Intel doing, and how can it pull this off?

The 2010’s Computing Continuum

Today’s computing is a continuum that ranges from smartphones to mission-critical datacenter machines, and from desktops to automobiles. These devices represent a total addressable market (TAM) that is approaching a billion processors a year and will explode to more than two billion by the end of the decade. Of that, traditional desktop microprocessors account for about 175 million chips this year, and notebooks for 225 million.

For more than four decades, solving all the world’s computing opportunities required multiple computer architectures, operating systems, and applications. That is hardly efficient for the world’s economy, but putting an IBM mainframe into a cell phone wasn’t practical. So we made do with multiple architectures and inefficiencies.

In the 1990s, I advised early adopters NCR and Sequent in their plans for Intel 486-based servers. Those were desktop PC chips harnessed into datacenter server roles. Over twenty years, Intel learned from its customers to create and improve the Xeon server family of chips, and has achieved a dominant role in datacenter servers.

Now, Intel Corporation is methodically using its world-class silicon design and fabrication capabilities to scale its industry-standard processors down to fit smartphones and embedded applications, and up into high-performance computing applications, as two examples. Scaling in other directions is still in the labs and under wraps.

The Intel Architecture (IA) Continuum

IA is Intel’s architecture and instruction set, common (with feature differentiation) to the Atom, Core, and Xeon microprocessors already used in the consumer electronics, desktop and notebook, and server markets, respectively. These microprocessors are able to run a common stack of software such as Java, Linux, or Microsoft Windows. IA also represents the hardware foundation for hundreds of billions of dollars in software application investments by enterprise and software application package developers. Those investments remain valuable assets as long as hardware platforms can run them, and backwards compatibility in IA has protected them.

To meet the widely varying requirements of this decade’s computing continuum, Intel is using the DNA of IA to create application-specific variants of its microprocessors. Think of this as silicon gene-splicing. Each variant has its own micro-architecture that is suited for its class of computing requirements (e.g., Sandy Bridge for 2011 desktops and notebooks). These genetically-related processors will extend Intel into new markets, and include instruction-set compatible microprocessors:

- Embedded processors and electronics known as “systems on a chip” (SOCs) with an Atom core and customized circuitry for controlling machines, display signage, automobiles, and industrial products;

- Atom, the general-purpose computer heart of consumer electronics mobile devices, tablets, and soon smartphones;

- Core i3, i5, and i7 processors for business and consumer desktops and notebooks, with increasing numbers of variants for form-factor, low power, and geography;

- Xeon processors for workstations and servers, with multi-processor capabilities that advance well into the mainframe-class, mission-critical computing segment;

- Xeon datacenter infrastructure processor variants (e.g., storage systems, with communications management a logical follow-on).

A Pause to Bring You Up To Date

Please do not be miffed: all of the above was published in February 2011, more than two years ago. We included it here because it sets the stage for reviewing where Intel stands in delivering on its long-term strategy and plans for the IA computing continuum, and to remind readers that Intel’s strategy has been hiding in plain sight for going on five years.

In that piece two years ago, we concluded that IA fits the market requirements of the vast majority of the decade’s computing work requirements, and that Intel is singularly capable of creating the products to fill the expanding needs of the computing market (e.g., many core).

With the launch of the 4th Generation Core 22nm microprocessors (code-name Haswell) this week and the announcement of the code-name Baytrail 22nm Atom systems on a chip (SoCs), it’s an appropriate time to take the pulse of Intel’s long-term stated direction and the products that map to the strategy.

Systems on a Chip (SoCs)

The Haswell/Baytrail launch would be a lot less impressive if Intel had not mastered the SoC.

The benefits of an SoC compared to the traditional multi-chip approach Intel has used up until now are: fewer components, less board space, greater integration, lower power consumption, lower production and assembly costs, and better performance. Phew! Intel could not build a competitive smartphone until it could put all of the logic for a computer onto one chip.

This week’s announcements include SoCs for low-voltage notebooks, tablets, and smartphones. The data center Atom SoCs, code-name Avoton, are expected later this year.

For the first time, Intel’s mainstream PC, data center, and mobile businesses include highly competitive SoCs.

SoCs are all about integration. The announcement last month at Intel’s annual investor meeting that “integration to innovation” was an additional strategy vector for the company hints at using many more variations of SoCs to meet Intel’s market opportunities with highly targeted SoC-based variants of Atom, Core, and Xeon.

Baytrail, The Forthcoming Atom Hero

With the SoCs for Baytrail in tablets and Merrifield in smartphones, Intel can for the first time seriously compete for mobile market share against ARM competitors on performance-per-watt and performance. These devices are likely to run the Windows 8, Android, and Chrome operating systems. They will be sold to carriers globally. There will be variants for local markets (e.g., China and Africa).

The smartphone and tablet markets combined exceed the PC market. By delivering competitive chips that run thousands of legacy apps, Intel has finally caught up on the technology front of the mobile business.

Along with almost the entire IT industry, Intel missed the opportunity that became the Apple iPhone. Early Atom processors were not SoCs, had poor battery life, and were relatively expensive. That’s a deep hole to climb out of. But Intel has done just that. There are a lot fewer naysayers than two years ago. The pendulum is now swinging Intel’s way on Atom. 2014 will be the year Intel starts garnering serious market share in mobile devices.

4th Generation Core for Mainstream Notebooks and PCs

Haswell is a new architecture implemented in new SoCs for long-battery-life notebooks, and with traditional chipsets for mainstream notebooks and desktops. The architecture moves the bar markedly higher in graphics performance, power management, and floating point (e.g., scientific) computations.

We are rethinking our computing model as a result of Haswell notebooks and PCs. Unless you are an intense gamer or workstation-class content producer, we think a notebook-technology device is the best solution.

Compared to four-year-old notebooks in Intel’s own tests, Haswell-era notebooks are half the weight and half the height, get work done 1.8x faster, convert videos 23x faster, play popular games 26x faster, wake up and go in a few seconds, and deliver 3x the battery life for HD movie playing. Why be tethered to a desktop?

Black, breadbox-size desktops are giving way to all-in-one (AIO) designs like the Apple iMac used to write this blog. That iMac has been running for two years at 100% CPU utilization with no problems. (It does medical research in the background, folding proteins.) New PC designs use notebook-like components to fit behind the screen. You’ll see AIOs this fall that lie flat as large tablets or go vertical with a rear kick-stand. With touch screens, wireless Internet, and Bluetooth peripherals, these new AIOs are easily transportable around the house. That’s the way we see the mainstream desktop PC evolving.

And PCs need to evolve quickly. Sales are down almost 10% this year. One reason is global macro-economic conditions. But everybody knows the PC replacement cycle has slowed to a crawl. Intel’s challenge is to spark the PC replacement cycle. Haswell PCs and notebooks, as noted above, deliver a far superior experience to what users are putting up with in their old, obsolescent devices.

Xeon processors for workstations, servers, storage, and communications

The data center is a very successful story for Intel. The company has steadily gained workloads from traditional (largely legacy Unix) systems; grown share in the big-ticket Top 500 high-performance computing segment; evolved with mega-datacenter customers such as Amazon, Facebook, and Google; and extended Xeon into storage and communications processors inside the datacenter.

The Haswell architecture includes two additions of great benefit to data-center computing. First, a new floating-point architecture and instructions should improve scientific and technical computing throughput by up to 60%, a huge gain over the installed server base. Second, transactional memory is a technology that makes it easier for programmers to deliver fine-grain parallelism, and hence to take advantage of multi-cores with multi-threaded programs, including making operating systems and systems software like databases run more efficiently.
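To give a feel for why transactional memory helps with fine-grain parallelism, here is a minimal sketch of the underlying idea: do the work optimistically, commit only if nobody else changed the data in the meantime, and retry otherwise. This is a software analogy written in Python, not Haswell’s hardware instructions, and the names `VersionedCell`, `atomically`, and `run_demo` are hypothetical ones made up for illustration.

```python
import threading


class VersionedCell:
    """A shared value with a version counter (a toy stand-in for transactional state)."""

    def __init__(self, value):
        self.value = value
        self.version = 0
        # Held only for the brief read and commit steps, never during the work itself.
        self._commit_lock = threading.Lock()

    def read(self):
        """Return a consistent (value, version) snapshot."""
        with self._commit_lock:
            return self.value, self.version

    def try_commit(self, expected_version, new_value):
        """Publish new_value only if no other thread committed since the snapshot."""
        with self._commit_lock:
            if self.version != expected_version:
                return False  # conflict: another thread committed first
            self.value = new_value
            self.version += 1
            return True


def atomically(cell, update):
    """Apply update() optimistically, retrying on conflict. Returns the retry count."""
    retries = 0
    while True:
        value, version = cell.read()
        if cell.try_commit(version, update(value)):
            return retries
        retries += 1


def run_demo(threads=4, increments=1000):
    """Have several threads increment one shared counter and return the final value."""
    cell = VersionedCell(0)

    def worker():
        for _ in range(increments):
            atomically(cell, lambda v: v + 1)

    pool = [threading.Thread(target=worker) for _ in range(threads)]
    for t in pool:
        t.start()
    for t in pool:
        t.join()
    return cell.value
```

Calling `run_demo(4, 1000)` should leave the counter at 4000 no matter how the threads interleave, because a conflicting update is retried rather than lost. The appeal for programmers is that the update function itself never takes a lock; in a hardware implementation the conflict detection is done by the processor rather than by a version counter.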

In the past year, the company met one data-center threat, GPU-based computing, with Xeon Phi, a server add-in card that contains dozens of IA cores and runs a version of Linux to enable massively parallel processing. Xeon Phi competes with GPU-based challengers from AMD and NVIDIA.

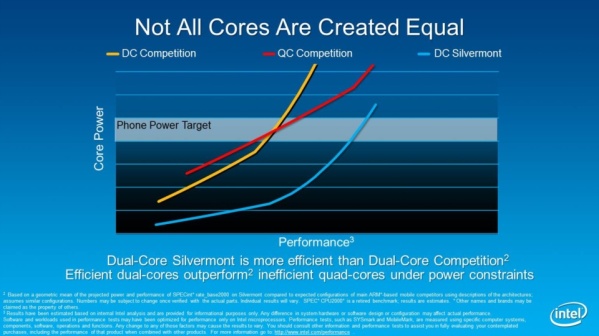

Another challenge, micro-servers, is more a vision than a market today. Nevertheless, Intel created the code-name Avoton Atom SoC for delivery later this year. Avoton will compete against emerging AMD- and ARM-based micro-server designs.

Challenges

1. The most difficult technology challenge that Intel faces this decade remains software, not hardware. Internally, the growing list of must-deliver software drivers for hardware such as processor-integrated graphics means that the rigid two-year, tick-tock hardware model must also accommodate software delivery schedules.

Externally, Intel’s full-fray assault on the mobile market requires exquisite tact in dealing with the complex relationships with key software/platform merchants: Apple (iOS), Google (Android), and Microsoft (Windows), who are tough competitors.

In the consumer space such as smartphones, Intel’s ability to deliver applications and a winning user experience is limited by the company’s OEM distribution model. More emphasis needs to be placed on the end-user application ecosystem, both quality and quantity. We’re thinking more reference platform than reference hardware.

2. By the end of the decade, silicon fabrication will be under 10 nm, and it is a lot less clear how Moore’s Law will perform in the 2020s. Nevertheless, we are optimistic about the next 10-12 years.

3. The company missed the coming iPhone and lost out on a lot of market potential. That can’t happen again. The company last month set up a new emerging devices division charged with finding the next big thing around the same time others do.

4. In the past, we’ve believed that mobile devices (tablets and smartphones) were additive to PCs and notebooks, not substitutional. The new generation of Haswell and Baytrail mobile devices, especially when running Microsoft Windows, offers the best of the portable/consumption world together with the performance and application software (e.g., Microsoft Office) to produce content and data. Can Intel optimize the market around this pivot point?

Observations and Conclusions

Our summary observations have not changed in two years, and are reinforced by the Haswell/Baytrail SoCs that are this week’s proof point:

- Intel is taking its proven IA platforms and modifying them to scale competitively as existing markets evolve and as new markets such as smartphones emerge.

- IA scales from handhelds to mission-critical enterprise applications, all able to benefit from a common set of software development tools while protecting the vast majority of the world’s software investments. Moreover, IA and Intel itself are evolving to specifically meet the needs of a spectrum of computing made personal: the idea that a person will have multiple computing devices that match the time, place, and needs of the user.

- Intel is the only company with an architecture, cash flow, fabs, and R&D capable of scaling its computing engines up and down to meet the decade’s big market opportunities.

Looking forward, Intel has fewer and less critical technology challenges than at any point since the iPhone launch in 2007. Instead, the company’s largely engineering-oriented talent must help the world through a complex market-development challenge as we all sort out what devices are best suited for what tasks. We’ve only scratched the surface of convertible tablet/notebook designs. How can Intel help consumers decide what they want and need so the industry can make them profitably? How fast can Intel help the market to make up its mind? Perhaps the “integration to innovation” initiative needs a marketing component.

If the three-year evolving Ultrabook campaign is an example of how Intel can change consumer tastes, then we think industry progress will be slower than optimal. A “win the hearts and minds” campaign is needed, learning from the lessons of the Ultrabook evolution. It will take skills in influencing and moving markets, skills Intel will need more of as personal computing changes over the next decade, for example, as perceptual computing morphs the user interface.

Absent a macro-economic melt-down, Intel is highly likely to enjoy the fruits of five years of investments over the coming two-year life of the Haswell architecture. And there’s no pressing need today to focus beyond 2015.

Biography

Peter S. Kastner is an industry analyst with over forty-five years of experience in application development, datacenter operations, computer industry marketing, PCs, and market research. He was a co-founder of industry-watcher Aberdeen Group in 1989. His firm, Scott-Page LLC, consults with technology companies and technology users.

Twitter: @peterskastner